The Epiphany

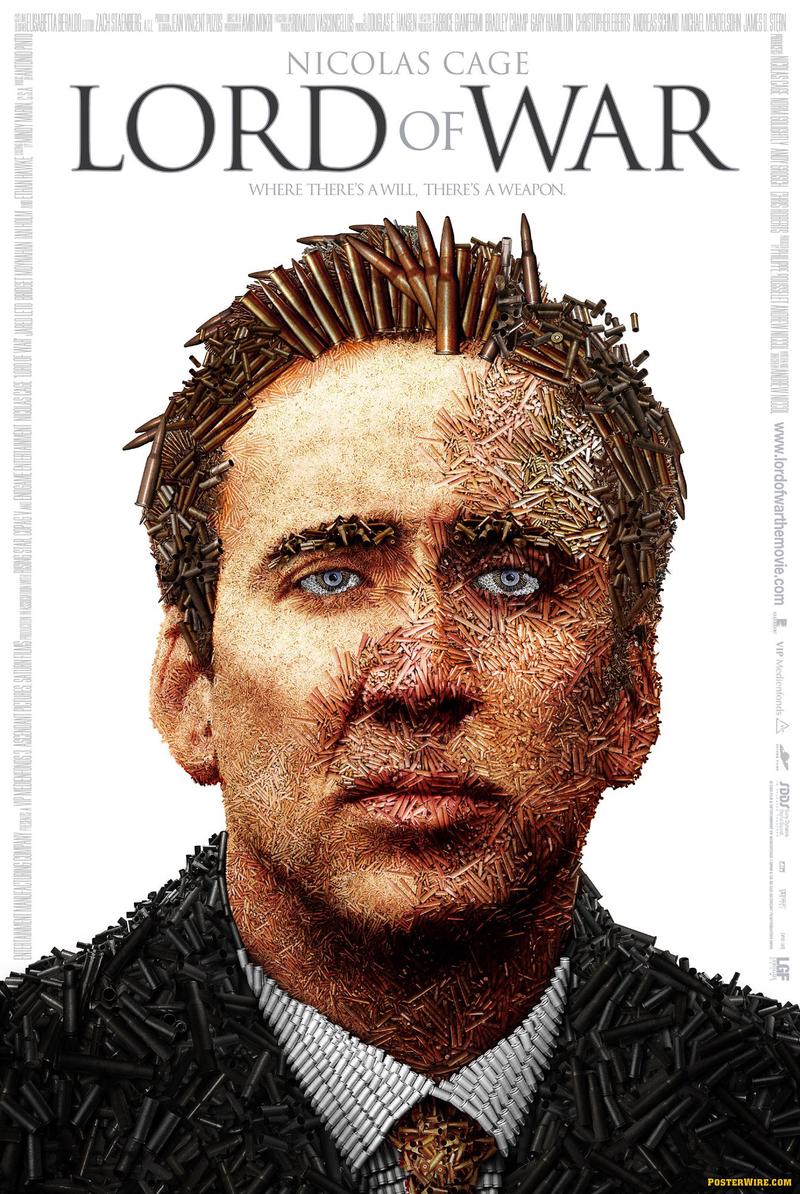

For the last few weeks, this Lord of War (2005) movie poster that shows Nicolas Cage’s face made of bullets has been renting space in my head. As I’ve been thinking about GPUs in the AI supply chain, my brain did a weird thing: it swapped in Jensen Huang for Nicolas Cage.

Lord of War (2005) poster

The reason the image of Jensen came to mind was because I cannot stop comparing the context of the movie to the present moment in the compute markets.

Before Cage became internet fodder for reaction GIFs, he was capable of genuine intensity. Lord of War (2005) which holds a 7.6 on IMDb and 62% on Rotten Tomatoes can be rated as one of his best works. In this film, Cage played Yuri Orlov, a fictionalized version of a real arms dealer named Viktor Bout who was nicknamed “The Merchant of Death.” He supplied weapons to dictators, rebel groups, and anyone who could pay.

Now think about what Cage’s character did in the film. He sat at the center of a network and supplied everyone. He was the connective tissue between conflict and the means to wage it.

Does that sound familiar?

The Lord of Tokens

The parallel is so obvious it almost feels lazy to point out. But sometimes the obvious connections are the most worth exploring.

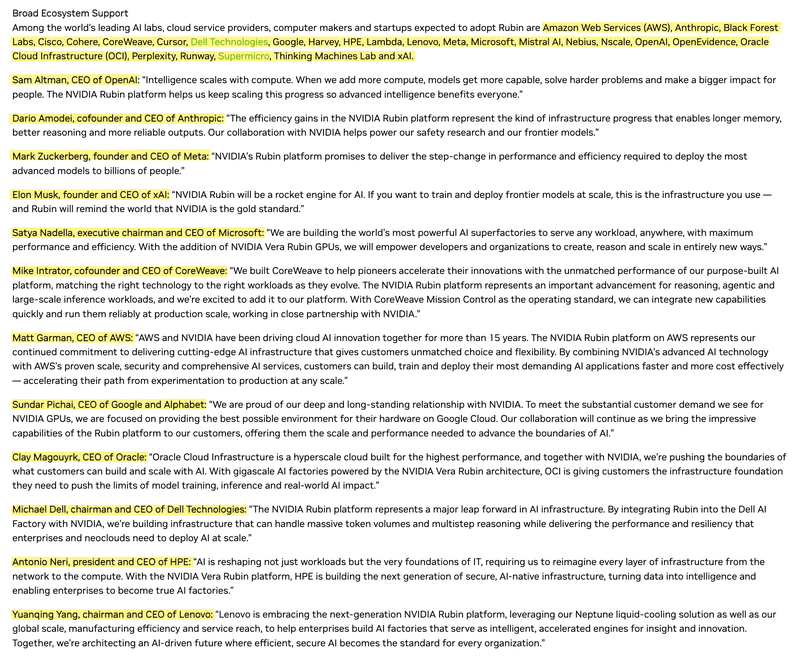

Every AI company, every research lab, every tech giant with AI ambitions is in an arms race. Not for weapons, but for compute. And there’s only one dealer in town: Jensen Huang’s Nvidia. Nvidia today occupies the position Yuri Orlov occupied in the arms trade. If you need evidence, you only have to look at the “Broad Ecosystem Support” section of Nvidia’s press release for its newest AI platform, Rubin. Remember, Rubin won’t be mass deployed for another year, yet the who’s who of tech is already effusive about it!

Nvidia Vera Rubin press release (CES 2026)

Jensen Huang, Nvidia’s co-founder and CEO, sits at the center of what may be the most consequential technology transition of our lifetime. Every major technology company, startup, research institution and government is attempting to acquire as many GPUs as possible. The supply chain has become a geopolitical concern and the balance of economic and military power may depend on access to cutting-edge silicon.

The Cost of Competence

When I first saw the Lord of War poster, I didn’t think about Nicolas Cage. I thought about the poster creation process. The poster, created by the design agency Art Machine, is a masterclass in compositing. A mosaic of bullets with thousands of individual shell casings, arranged to form a face. The lighting had to be consistent and the colors had to blend. The overall effect had to be both abstract and recognizable.

I started wondering: How would I deconstruct this if I had to teach someone to do it? A team of designers likely had to manually source images of bullets, match the lighting of the individual bullets with the shadows on the face and arrange thousands of shell casings to form a recognizable human face. It probably took them two to three weeks of intense, expensive labor that likely involved thousands of mouse clicks in Adobe Photoshop!

In 2005, that level of skill was rare which means that for Art Machine, this expertise was probably their moat. And because they could do this kind of work, they probably charged a premium for it.

The Experiment

With the image generation models today, a lot of the barriers in the creative space are being eroded. Now I can type a prompt into an interface and ask for any thought that comes to mind and AI models will attempt to fulfill my request within seconds.

This compression of skill is what I’m trying to understand. Decades of design expertise, distilled into a prompt and thousands of dollars of professional labor, reduced to an API call.

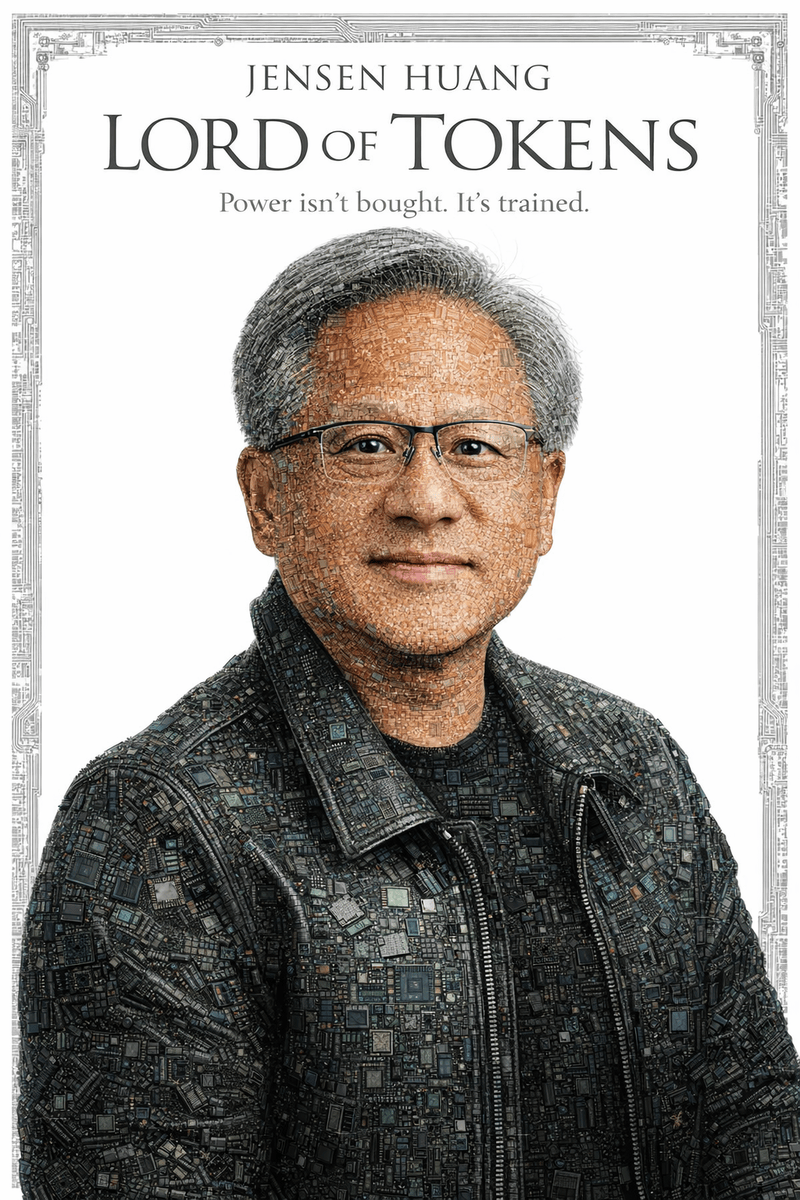

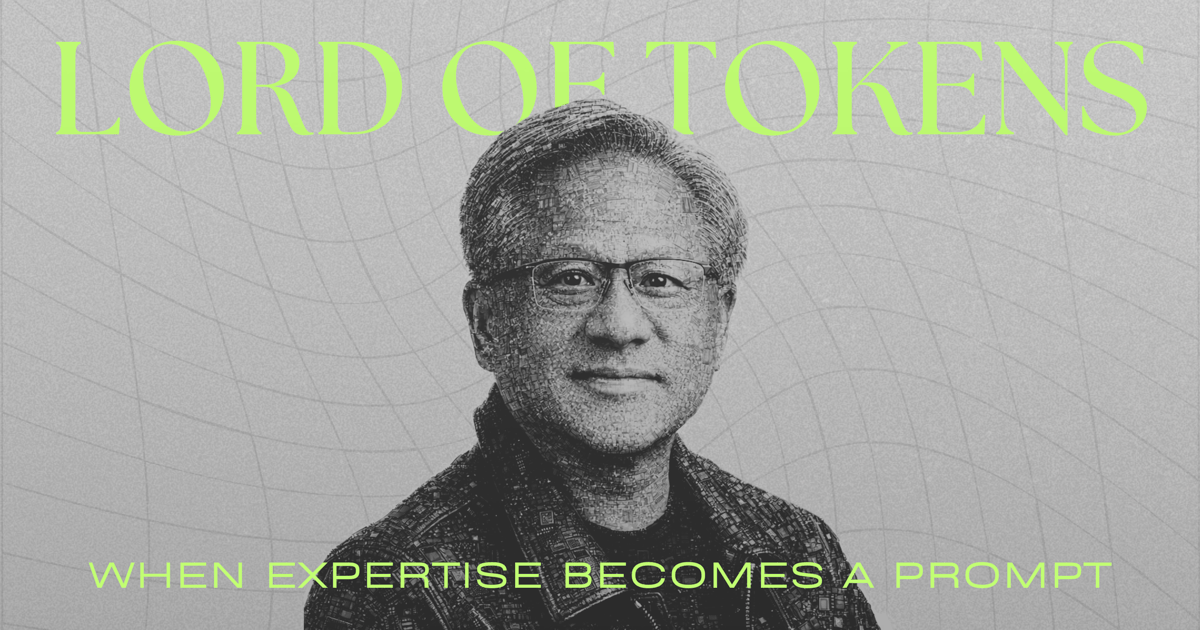

To test for this, here are the questions that came to mind: what if you took the Lord of War poster with its iconic, bullet mosaic and reimagined it for the GPU era? What if instead of bullets, it was GPU dies and silicon wafers constituting Jensen’s face? What if the title was Lord of Tokens instead of Lord of War? What if instead of the credits in the border, it was PCB traces?

Could AI do what a professional design agency spent two to three weeks doing in 2005?

The Setup

You might have already seen this Artificial Analysis “Image Editing” leaderboard for AI models. It’s getting crowded at the top with only a tiny 6% delta between the ELO scores of #1 and #5 model.

(image)

I wondered what does 6% even mean in the real world? My belief is that AI labs can game the benchmarks if everyone is playing the benchmaxxing game. The real test is whether two decades on from Lord of War (2005), can a model recreate Art Machine’s process? Can a model intuitively understand how to rearrange GPU dies and other silicon components into Jensen’s face?

The models

To get access to the latest models I used LMArena.ai. Although LMArena has numerous models for image generation, for this task I needed models that fit these requirements:

- Allow image to image editing

- Allow multiple reference images so that I could add original Lord of War poster and a profile picture of Jensen Huang to the prompt

Here are the models that fulfilled the above criteria:

| Model Name | Launched |

|---|---|

| OpenAI GPT Image 1 | April 2025 |

| Google Nano Banana (Gemini 2.5 Flash Image) | August 2025 |

| Google Nano Banana Pro (Gemini 3 Pro Image) | November 2025 |

| OpenAI GPT Image 1.5 | December 2025 |

| Bytedance Seedream 4.5 | December 2025 |

| Black Forest Labs Flux 2 [Max] | December 2025 |

| Reve V1.1 | December 2025 |

I’ve included the launch dates of these models to show how we’ve progressed through 2025 with models fit for this task. As you can see, this space with models available for this task suddenly got crowded towards the end of 2025.

The prompt (The torture test)

Here are the two reference images that were provided to the models:

I gave every model four tries to account for variance. The prompt was specific and difficult.

Task: Combine these images into a vertical movie poster parody.

Reference Inputs:

- Use Image 1 (Lord of War poster) for the composition, pose, lighting style, font placement, and aspect ratio.

- Use Image 2 (Jensen Huang profile photo) for the subject’s identity and facial features.

Subject & Material:

A photorealistic portrait of Jensen Huang posing exactly like the subject in Image 1. Instead of bullet casings, the subject’s face and leather jacket are constructed from a mosaic of computer chips, GPU dies, and silicon wafers. Use real-world knowledge to accurately depict the texture of computer hardware.Text Rendering:

Render the following text clearly, matching the font style from Image 1:

- Top Headline: “JENSEN HUANG”

- Main Title: “LORD OF TOKENS”

- Bottom Tagline: “POWER ISN’T BOUGHT. IT’S TRAINED.”

Style & Camera:

- Location/Background: Pure white background.

- Composition: A vertical portrait shot with high contrast.

- Details: Replace the border graphics with grey PCB circuit traces.

Side note: Why is this prompt a “torture test”?

Before we look at results, it’s important to understand what I’m testing and why this is a torture test for the models. Most standard benchmarks focus on single tasks: “remove the dog,” “change the background to Paris,” or “make the person smile.” This prompt requires the models to perform four distinct high-level tasks simultaneously.

- Semantic Material Swapping (The “Texture” Challenge): I’m not just asking for a face swap; I’m asking for a material swap. The model must understand the 3D volume of Jensen’s face but render it using the texture and geometry of “GPU dies and other silicon components.” Most models will fail here by either just pasting a normal photo of Jensen (ignoring the chips) or making a mess of chips that doesn’t look like Jensen.

- Specific Text Rendering (The “OCR” Challenge): Text is the Achilles’ heel of image generation. I have asked for three specific text updates (Title, Name, Tagline) while retaining the original poster’s serif font and styles (i.e. colors e.g. light grey for subtitles and tagline and black for “Lord” and “Token” ). If a model gets the face right, it often hallucinates the text, and vice versa.

- Identity Preservation: The model needs to pull specific facial features from the reference photo (Jensen) but heavily stylize them (Mosaic art). Balancing “likeness” with “heavy artistic filter” is a delicate weight balancing act for diffusion models.

- Fine-Grained Detail (PCB Traces): Asking to change a simple border to “PCB traces” tests the model’s ability to handle peripheral details without corrupting the main subject.

The Results

Below are the four outputs per model generated from the exact same prompt. I’m holding commentary until the end so you can judge them cleanly first.

Ordered from best to worst based on my preference.

1. OpenAI GPT Image 1.5

2. Google Nano Banana Pro

3. OpenAI GPT Image 1

4. All the rest

I consider the following models to have failed the test entirely. They disregarded the prompt instructions or produced unusable results, so I’m grouping them together:

Google Nano Banana

Reve v1.1

Black Forest labs Flux 2 [Max]

Bytedance Seedream 4.5

Performance comparison

Here’s how I scored the models across the specific capabilities I cared about.

Scoring rubric

- Each generated image gets a 1 (pass) or 0 (fail) for each test.

- Each model gets 4 attempts, so each test has a max score of 4.

- Scores are averaged across tests.

- The final average is normalized to a percentage for easier comparison.

- Tests:

- Jensen Likeness - Whether the output matches the input Jensen image

- Retain Cage pose - Whether the output image matches the original pose of the Nicolas Cage image*

- Chip mosaic detail - Whether the output image has artistic rendering and fine details on the chip mosaic*

- Text style transfer - Whether the output image Title/Name/Tagline font colors match the original poster*

- Text font transfer - Whether the output image text font matches the serif fonts of the original poster*

- PCB Traces Border - Whether the output image has an accurate PCB traces border*

| Model 👉 Test 👇 |

GPT Image 1.5 | Nano Banana Pro | Nano Banana | Seedream 4.5 | Flux 2 [Max] | GPT Image 1 | Reve V1.1 |

|---|---|---|---|---|---|---|---|

| Jensen Likeness | 4 | 4 | 0 | 3 | 0 | 0 | 2 |

| Retain Cage pose | 0 | 2 | 2 | 0 | 3 | 0 | 1 |

| Chip mosaic detail | 4 | 0 | 0 | 0 | 0 | 0 | 0 |

| Text style transfer | 2 | 0 | 1 | 0 | 0 | 0 | 0 |

| Text font transfer | 4 | 4 | 4 | 1 | 1 | 3 | 1 |

| PCB Traces Border | 4 | 4 | 2 | 4 | 3 | 3 | 2 |

| Overall | 75% | 58% | 38% | 33% | 29% | 25% | 25% |

My Thoughts

There is a perception that OpenAI has fallen behind Google in image generation. Seven months ago, OpenAI released GPT Image 1, and the internet exploded with the Studio Ghibli trend. Then Google dropped the “Nano Banana” family of models which were faster and generated more realistic images which made OpenAI look like a laggard. Going into 2026, OpenAI needed a solid answer to change the narrative forming in the market.

My test here proves that OpenAI has a solid model capable of replicating the work of a professional design agency (for more proof read the “Bonus Content” section).

The consensus is that GPT Image 1.5 still suffers from the “AI look” with faces that are too smooth, perfect and artificial. That is a fair critique but in this specific experiment, GPT Image 1.5 was the only model that achieved the goal.

Both GPT Image 1.5 and Nano Banana Pro preserved Jensen Huang’s facial features. But GPT Image 1.5 succeeded in the aesthetic execution. It understood the concept of scale: using miniature dies for facial texture and larger wafers for the jacket. This replicated the visual depth of the original Lord of War poster. Nano Banana Pro failed to do this, resulting in a flatter, less convincing mosaic.

The rest of the field is not competitive yet. But once one model solves a problem, the others usually follow quickly. I expect this to be a solved problem by late 2026. Perhaps at that time, I’ll write another blog with a different torture test :)

The implications

The interesting thing isn’t that AI can do this now. The interesting thing is what happens next. Because if you can recreate a two-week professional project in a single prompt, what can’t you do? And what does that mean for the people whose jobs used to be making things like this? Will we see fewer of them or will we see them exploring more experimental projects too costly to produce before?

I don’t have neat answers. But I know this: the gap between idea and execution is shrinking. We are entering an era where the supply of “creation” is infinite. The bottleneck is no longer the ability to make things; it’s the taste to know what is worth making. The question isn’t whether you can do something. It’s whether what you do matters.

Bonus content

How far can I push the image models?

I re-ran the test using the OpenAI GPT Image 1.5 prompt guide. The goal was to see if model-specific prompting could overcome the generic failures of the first round.

The difference was immediate as the new prompt generated significantly better results with finer details.

Generated using GPT 1.5 optimized prompt

Here is the GPT Image 1.5 optimized prompt I used.

Prompt

Create a movie poster parody combining the composition of Image 1 (Lord of War) with the subject of Image 2 (Jensen Huang).

Subject & Texture:

Replace the face of Nicolas Cage (Image 1) with the face of Jensen Huang (Image 2).

The subject’s face, skin, and leather jacket must be constructed entirely as a dense mosaic of computer chips, GPU dies, silicon wafers, and electronic components, mimicking the “bullet mosaic” style of Image 1.

Maintain the lighting and facial structure of Jensen (Image 2) but render it with the high-contrast, metallic texture of hardware.Text Replacement:

Replace the text verbatim using the same font style, size, color, and placement as Image 1:

- Top name: “JENSEN HUANG”

- Main Title: “LORD OF TOKENS”

- Bottom tagline: “POWER ISN’T BOUGHT. IT’S TRAINED.”

Details & Environment:

- Background: Pure white, same as Image 1.

- Border: Replace the original border elements with grey PCB circuit traces.

- Composition: Keep the centered portrait framing and vertical aspect ratio of Image 1.

A thought on mosaics and model parameters

There’s something almost sacred about the mosaic. Not in a religious sense, but in the sense of something that reveals a deeper truth about how complex things emerge from simple parts.

A mosaic takes fragments, shards, leftovers, pieces that are individually meaningless, and arranges them into something coherent, something that only makes sense when you step back and see the whole. It could be a face made of bullets or a floor made of colored stones or a portrait made of thousands of tiny squares of glass.

If you think deeply about it, isn’t that exactly what these AI models are doing too? They’re taking billions of parameters i.e. pieces of learned knowledge about what faces look like, what GPUs look like, how light falls on metal and arranging them into something new. The individual parameters don’t matter when processing a prompt. What matters is the arrangement, the way they fit together and the pattern that emerges from the chaos of training on the entire internet.

I don’t have a concrete conclusion to share but maybe a mosaic is the perfect metaphor for understanding machine intelligence: not a unified thing, but a trillion fragments, arranged just so that something intelligent emerges - “Mosaics of intelligence”.

-1.png)

-2.png)

-3.png)

-4.png)

-4.png)

-3.png)

-1.png)

-2.png)